
In pharmaceutical analysis, the Limit of Detection (LOD) and Limit of Quantitation (LOQ) must be determined accurately during method validation, especially for methods that detect impurities or quantify trace-level components.

What Are LOD and LOQ?

✅ LOD is the lowest concentration of an analyte that can be reliably detected, though not necessarily quantified as an exact value.

✅ LOQ is the lowest concentration that can be quantified with acceptable precision and accuracy.

Common Methods for Determining LOD & LOQ

(As per ICH Q2(R2) guidelines)

1. Signal-to-Noise Ratio (S/N) Method

LOD: S/N ≈ 3:1

LOQ: S/N ≈ 10:1

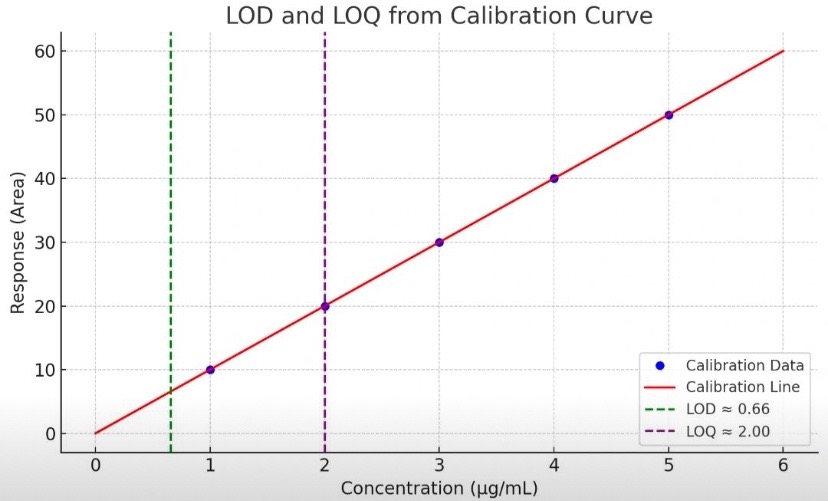
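As a rough illustration of the S/N check, here is a minimal sketch. It assumes the common pharmacopoeial convention S/N = 2H/h, where H is the peak height above the baseline and h is the peak-to-peak range of the baseline noise; other definitions (for example, peak height over RMS noise) are also in use. The function names and the baseline readings are hypothetical.

```python
import numpy as np

def signal_to_noise(peak_height, baseline):
    """Estimate S/N as 2H/h (assumed convention).

    H = peak height above the baseline,
    h = peak-to-peak range of the baseline noise.
    """
    baseline = np.asarray(baseline, dtype=float)
    noise_range = baseline.max() - baseline.min()  # h
    return 2.0 * peak_height / noise_range

def classify(sn, lod_ratio=3.0, loq_ratio=10.0):
    """Compare an S/N value against the 3:1 and 10:1 thresholds."""
    if sn >= loq_ratio:
        return "quantifiable"
    if sn >= lod_ratio:
        return "detectable"
    return "not detected"

# Hypothetical baseline readings (detector units) from a blank region.
baseline = [0.10, -0.10, 0.05, -0.05, 0.00, 0.08, -0.08]
sn = signal_to_noise(peak_height=0.4, baseline=baseline)
print(round(sn, 1), classify(sn))
```

In practice the noise window and the way peak height is measured should follow whatever the validation protocol or chromatography data system specifies.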

2. Standard Deviation and Slope Method (σ/S)

A linear calibration curve is plotted with:

X-axis: Concentration (e.g., ppm or µg/mL)

Y-axis: Instrument response (e.g., peak area or height)

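Then calculate, where σ is the standard deviation of the response (for example, the residual standard deviation of the regression line, the standard deviation of the y-intercepts, or the standard deviation of blank responses) and S is the slope of the calibration curve: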

LOD = 3.3 × (σ/S) → Point just above the noise level

LOQ = 10 × (σ/S) → Lowest reliably quantifiable point

Graph details:

Green dashed line: LOD

Purple dashed line: LOQ

Red line: Calibration curve
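The σ/S calculation can be sketched as follows. This example takes σ as the residual standard deviation of the regression line (one of several accepted choices) and S as the fitted slope. The function name and the calibration data are hypothetical and only illustrate the arithmetic.

```python
import numpy as np

def lod_loq_from_calibration(conc, response):
    """Return (slope, sigma, LOD, LOQ) from a linear calibration fit."""
    conc = np.asarray(conc, dtype=float)
    response = np.asarray(response, dtype=float)

    # Least-squares straight line: response = slope * conc + intercept
    slope, intercept = np.polyfit(conc, response, 1)

    # Residual standard deviation with n - 2 degrees of freedom
    residuals = response - (slope * conc + intercept)
    sigma = np.sqrt(np.sum(residuals ** 2) / (len(conc) - 2))

    lod = 3.3 * sigma / slope
    loq = 10.0 * sigma / slope
    return slope, sigma, lod, loq

# Hypothetical calibration data: concentration (µg/mL) vs. peak area.
conc = [1.0, 2.0, 3.0, 4.0, 5.0]
area = [10.2, 19.8, 30.1, 39.9, 50.0]
slope, sigma, lod, loq = lod_loq_from_calibration(conc, area)
print(f"slope={slope:.3f}  sigma={sigma:.4f}  LOD={lod:.4f}  LOQ={loq:.4f}")
```

The LOD and LOQ come out in the same concentration units as the x-axis. Values estimated this way are usually confirmed experimentally by analyzing samples prepared near those concentrations.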

3. Visual Evaluation or Dilution Method

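Particularly useful for non-instrumental techniques, though it can also be applied to instrumental ones. Samples with known, decreasing analyte concentrations are analyzed, and the LOD or LOQ is taken as the lowest level at which the analyte can be reliably detected or quantified.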
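A dilution series of this kind can be summarized with a small helper. The sketch below is only an assumption about how one might record the observations: it treats a level as "detected" when every replicate was judged positive, and it stops at the first level that fails. That decision rule, the function name, and the data are hypothetical.

```python
def visual_lod(observations):
    """Return the lowest concentration at which all replicates were detected.

    observations maps concentration -> list of True/False detection calls.
    Levels are checked from highest to lowest; the search stops at the
    first level where any replicate was missed.
    """
    lod = None
    for conc in sorted(observations, reverse=True):
        if all(observations[conc]):
            lod = conc
        else:
            break
    return lod

# Hypothetical two-fold dilution series (µg/mL), three replicates per level.
observations = {
    8.0: [True, True, True],
    4.0: [True, True, True],
    2.0: [True, True, True],
    1.0: [True, False, True],
    0.5: [False, False, False],
}
print(visual_lod(observations))
```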

✔️ Why Is It Important?

Accurate determination of LOD and LOQ supports compliance with regulatory expectations (ICH Q2(R2)) and is critical for monitoring impurities that may affect the safety and efficacy of pharmaceutical products.

Best Practices:

Always use freshly prepared standards

Confirm the estimated LOD/LOQ during precision and recovery studies by analyzing samples prepared at those concentrations

Ensure good peak shape and clear baseline resolution
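For the precision and recovery check at the LOQ level, a minimal sketch might look like the following. It computes %RSD and mean percent recovery from replicate results. The replicate values and the nominal concentration are hypothetical, and the acceptance limits to compare against should come from the validation protocol, not from this example.

```python
import statistics

def percent_rsd(values):
    """Relative standard deviation (sample SD / mean), in percent."""
    return 100.0 * statistics.stdev(values) / statistics.mean(values)

def mean_recovery(measured, nominal):
    """Mean measured concentration as a percentage of the nominal value."""
    return 100.0 * statistics.mean(measured) / nominal

# Hypothetical six replicate results (µg/mL) at a nominal LOQ of 0.50 µg/mL.
replicates = [0.48, 0.52, 0.50, 0.49, 0.51, 0.50]
rsd = percent_rsd(replicates)
recovery = mean_recovery(replicates, nominal=0.50)
print(f"%RSD = {rsd:.2f}, mean recovery = {recovery:.1f}%")
```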
