Why Assuming RRF = 1.0 Risks Toxic Impurity Errors

In HPLC analysis, the biggest lie a chromatogram can tell you is that "bigger peak = more amount."

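We all know that different molecules respond differently to the same detector. Yet assuming a Relative Response Factor (RRF) of 1.0 for impurities is still a common shortcut, and it can lead to dangerous under- or over-estimation of toxic impurities. If an impurity responds more weakly than the API, an uncorrected result under-reports it. If it responds more strongly, the result over-reports it.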
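Here is a minimal Python sketch of why the shortcut can cut both ways. It assumes the impurity is reported as a percentage of the API peak area and corrected as impurity area / (RRF × API area) × 100, with RRF defined as impurity slope over API slope, as in the formula later in this post. The function name `impurity_percent` and the peak areas are hypothetical illustration values, not data.

```python
def impurity_percent(imp_area: float, api_area: float, rrf: float = 1.0) -> float:
    """Impurity level as % of API, corrected by RRF = slope(impurity) / slope(API).

    Dividing by the RRF converts the impurity's peak area into an API-equivalent
    response, so both peaks are on the same concentration scale.
    """
    if rrf <= 0:
        raise ValueError("RRF must be positive")
    return imp_area / (rrf * api_area) * 100.0


# Hypothetical peak areas (arbitrary units), for illustration only.
api_area = 1_000_000.0
imp_area = 1_000.0

# Same impurity peak, three possible "true" RRFs.
for true_rrf in (0.5, 1.0, 2.0):
    assumed = impurity_percent(imp_area, api_area)  # RRF silently assumed to be 1.0
    corrected = impurity_percent(imp_area, api_area, true_rrf)
    print(f"true RRF {true_rrf}: assumed {assumed:.3f}%  corrected {corrected:.3f}%")
```

With a true RRF of 0.5, the uncorrected result (0.100%) is half the corrected level (0.200%), so a toxic impurity could appear to sit below a threshold it actually exceeds. With a true RRF of 2.0 the error runs the other way.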

Why RRF Determination is Non-Negotiable:

Chromophore Differences: Being an isomer or close analogue of the API doesn't mean an impurity absorbs UV light the same way. A small structural change can shift or weaken its chromophore.

Wavelength Sensitivity: An RRF valid at 254 nm might be completely wrong at 210 nm.

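Regulatory Impact: ICH Q3A/Q3B call for a correction factor when the API is used as the reference standard and an impurity's response factor is not close to the API's. A widely used convention applies one when the RRF falls outside 0.8–1.2, i.e., when the response differs by more than 20%.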
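The wavelength and threshold points combine into a simple decision rule. The sketch below assumes the 0.8–1.2 window above and checks an RRF determined separately at each detection wavelength. The function name `needs_correction` and the RRF values are hypothetical, chosen only to show that one impurity can pass at one wavelength and fail at another. The correction factor is taken as the reciprocal of the RRF.

```python
def needs_correction(rrf: float, low: float = 0.8, high: float = 1.2) -> bool:
    """True if the RRF falls outside the [low, high] window, i.e. the impurity's
    response differs from the API's by more than about 20%."""
    return not (low <= rrf <= high)


# Hypothetical RRFs for one impurity, each determined at its own wavelength.
rrf_by_wavelength_nm = {254: 0.95, 210: 0.62}

for wavelength, rrf in rrf_by_wavelength_nm.items():
    if needs_correction(rrf):
        # Correction factor = 1 / RRF, applied to the impurity's peak area.
        action = f"apply correction factor {1 / rrf:.2f}"
    else:
        action = "RRF of 1.0 acceptable"
    print(f"{wavelength} nm: RRF {rrf:.2f} -> {action}")
```

Keying the RRFs by wavelength reflects the point that an RRF is only valid for the detection conditions it was measured under. Changing the method wavelength means re-determining it rather than reusing the old value.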

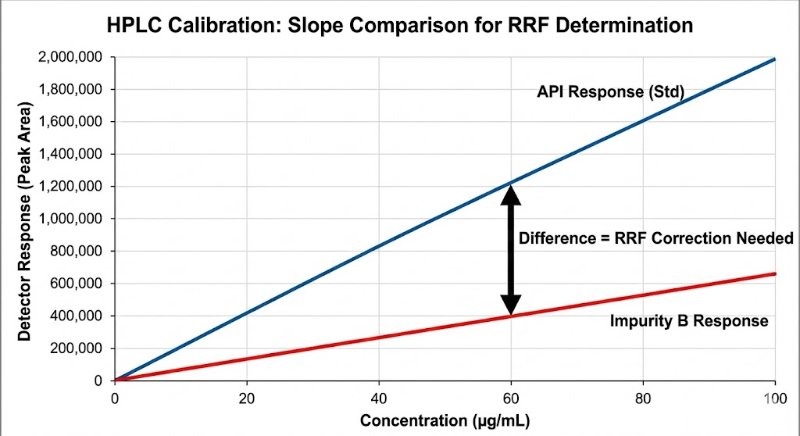

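The "Slope Method" Gold Standard: To get a true RRF, avoid the single-point check. Compare the slopes of the linearity curves (peak area vs. concentration) for the impurity and the API over the same range. A slope ratio is not distorted by a non-zero intercept the way a single area/concentration ratio is. Preparing both curves in the same diluent or matrix also helps minimize matrix effects.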

RRF = Slope (Impurity) / Slope (API)
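Here is a minimal Python sketch of the slope method. It assumes linearity data for the impurity and the API prepared over the same concentration range in the same diluent. The concentrations and peak areas are made-up numbers, not real data, and `ols_slope_intercept` is a hypothetical helper name for an ordinary least-squares fit. The impurity curve is given a deliberate non-zero intercept so the slope ratio can be compared with a single-point area/concentration ratio.

```python
def ols_slope_intercept(x: list[float], y: list[float]) -> tuple[float, float]:
    """Ordinary least-squares fit of y = slope * x + intercept."""
    n = len(x)
    if n < 2 or n != len(y):
        raise ValueError("need at least two paired points")
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


# Hypothetical linearity data: concentration (ug/mL) vs peak area.
conc = [0.5, 1.0, 2.0, 4.0, 8.0]
api_area = [10_000.0 * c for c in conc]           # slope 10000, zero intercept
imp_area = [6_000.0 * c + 1_500.0 for c in conc]  # slope 6000, intercept 1500

api_slope, _ = ols_slope_intercept(conc, api_area)
imp_slope, imp_intercept = ols_slope_intercept(conc, imp_area)
rrf_slope = imp_slope / api_slope  # RRF = Slope (Impurity) / Slope (API)

# Single-point RRF at the lowest level: the intercept is folded into the ratio.
rrf_single = (imp_area[0] / conc[0]) / (api_area[0] / conc[0])

print(f"slope-method RRF: {rrf_slope:.2f}")
print(f"single-point RRF at {conc[0]} ug/mL: {rrf_single:.2f}")
```

With these numbers the slope method gives an RRF of 0.60, while the single-point ratio at the lowest level gives 0.90. That value would sit inside a 0.8–1.2 window and wrongly suggest no correction is needed. The whole difference comes from the 1,500-area intercept, which a single point cannot separate from the true response.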

If you aren't correcting for response factors, are you really quantifying? Or are you just guessing?

How does your lab handle RRFs for unknown impurities? Do you default to 1.0 or run full slope-method determinations?